Hi Hail team,

I am writing to get advice on a Dataproc issue. I run Hail on Dataproc on GCP.

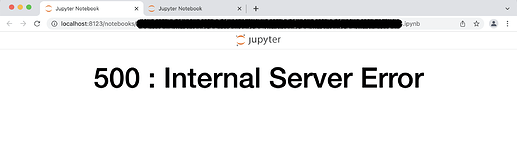

Today I got a “500 Internal Server Error” when I tried to open a Jupyter notebook. I had never seen this error before; everything worked until yesterday. If you have any idea how to solve it, please share it with me. Thank you.

Here are the commands I use to launch the Dataproc cluster and open the Jupyter notebook:

    hailctl dataproc start ${cluster_name} --vep GRCh38 \
        --requester-pays-allow-annotation-db \
        --packages gnomad --requester-pays-allow-buckets gnomad-public-requester-pays \
        --master-machine-type=n1-highmem-8 --worker-machine-type=n1-highmem-8 \
        --num-workers=2 --num-secondary-workers=0 \
        --worker-boot-disk-size=1000

    hailctl dataproc connect ${cluster_name} notebook

-Jina